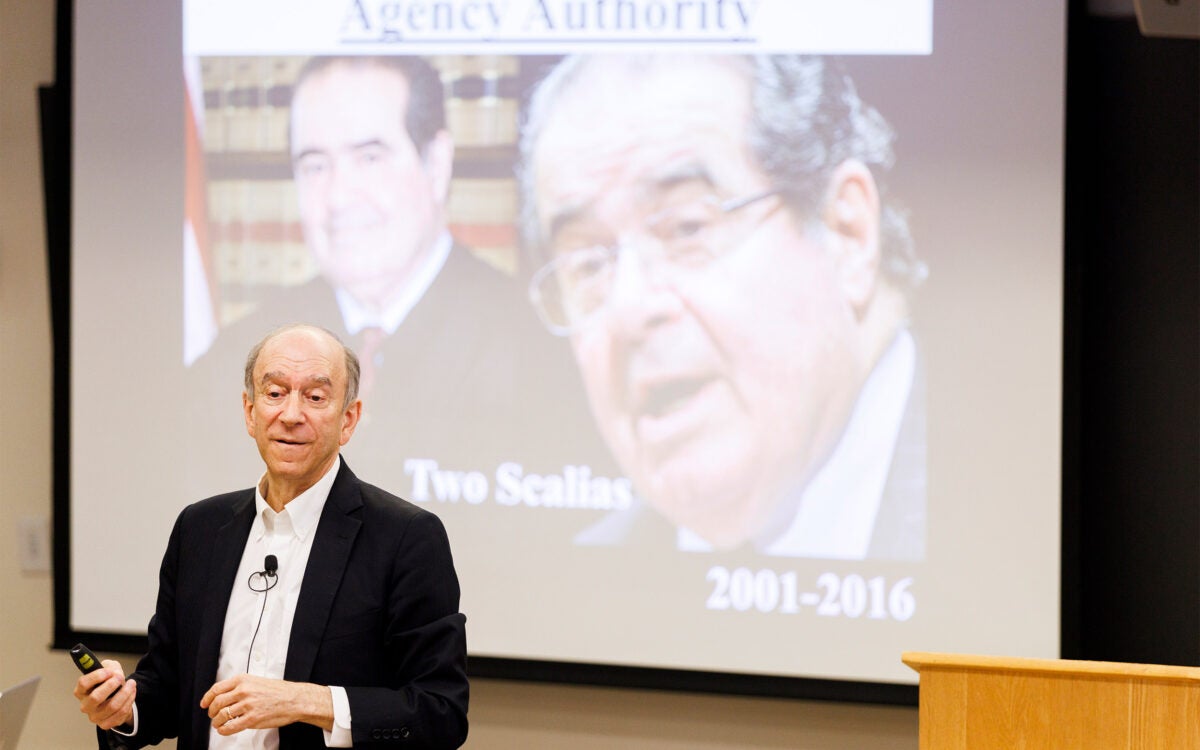

Cambridge Analytica CEO Alexander Nix (pictured) said his firm found a way to tailor political ads and messages on Facebook to millions of voters in the U.S., based in part on their fears and prejudices.

Photo by Christian Charisius/picture-alliance/dpa/AP

On the web, privacy in peril

Massive mining of users’ data on Facebook eats away at public’s online trust, analyst says

Innocent victim or background contributor? Facebook now faces questions from authorities on both sides of the Atlantic Ocean after news reports in The Guardian and The New York Times this week revealed that a psychologist illicitly gave data from 50 million Facebook users to a political consulting firm that tailored political ads to many users during the 2016 U.S. presidential election.

Cambridge Analytica, the firm that also advanced the Brexit referendum in Britain, is owned by hedge fund billionaire Robert Mercer and was run by Steve Bannon from 2014 to 2016 before he joined Donald Trump’s presidential campaign. According to whistleblower Christopher Wylie, who helped develop the targeting software, the firm tested pro-Trump slogans such as “drain the swamp” and met with Trump’s first campaign manager, Corey Lewandowski, more than a year before Trump announced his candidacy.

In a British television exposé, Cambridge Analytica CEO Alexander Nix bragged that his firm designed and ran Trump’s digital and messaging operation and had found a way to tailor political ads and messages on Facebook to millions of U.S. voters, based in part on their fears and prejudices.

Facebook insists that its data wasn’t technically breached, but also says it is unaware how the data wound up with Cambridge Analytica. In a statement posted to his own Facebook page Wednesday, CEO Mark Zuckerberg said the company was still trying to determine what happened and pledged to better protect user data and privacy. British authorities, the U.S. Federal Trade Commission (FTC), and the attorneys general of Massachusetts, New York, and New Jersey all have opened investigations into Facebook’s handling of its users’ data.

Vivek Krishnamurthy studies international issues in internet governance as a clinical attorney at Harvard Law School’s Cyber Law Clinic. He spoke with the Gazette about the legal implications of the breach for Facebook, the laxity in U.S. privacy protections, and how Facebook’s difficulties may mark the end of the tech industry’s long deregulation honeymoon in this country.

Q&A

Vivek Krishnamurthy

GAZETTE: What’s your reaction to reports that data of at least 50 million Facebook users wound up in the hands of a political consulting firm without users’ knowledge or express consent?

Krishnamurthy: It’s very concerning. There are concerns around the degree of consent, there are concerns around whether users reasonably expected that this would happen, and there are concerns around uses of this kind of data by a political campaign, generally. With regard to some of the allegations as to how the data has been used, there are particular concerns with the use of this data to target people for messages that might be less than completely true — the entire problem of information quality and fake news. This scandal arose [from events] in the past, and it’s coming out now. So it may be true that this kind of thing is less likely to happen now than it was in the 2016 election cycle, but nonetheless this is seriously concerning stuff, clearly.

GAZETTE: Was this in some ways inevitable given Facebook’s consent and privacy policies prior to 2014, and perhaps, as some claim, its less-than-rigorous enforcement effort even after 2014?

Krishnamurthy: Yes, I think it’s safe to say that the adequacy of Facebook’s privacy controls here is very questionable. Why is it that someone can develop a quiz app and then be able to suck up all of this data not only about the people who take the quiz, but also about all of their friends? So regardless of what the terms and conditions are when you sign up for Facebook, or what the privacy policy says, I think a lot of people are feeling that this is not reasonable. That’s why Facebook’s response here has rung a bit hollow. They’ve pushed back hard to say, “This is not a data breach. There was no unlawful entry into our system, no passwords were compromised, none of the security measures failed.” But the system was structured to allow, at the time, all of this information to leak out. It was an invitation to come in and take this information. So the original sin in this story is the fact that [a developer] was able to export this much data pertaining to 50 million people.

Second, I think there are some serious questions around the adequacy of Facebook’s response in 2015 and 2016 when there was this exchange with Cambridge Analytica and [its parent company] SCL Group, asking if the data was destroyed. It would be interesting to know what kinds of assurances Facebook sought. Did they simply ask and say, “We want you to destroy it,” [with the response] “O.K., we did it”? Were there any consequences tied to it? Were there any penalties for noncompliance? Once the data was in the hands of Cambridge Analytica and SCL Group, what kind of efforts did Facebook take to fix the situation then? I think that’s a very open question.

There’s an entire other side to this story that I think has not come out, which is that Facebook is between a rock and a hard place. Here’s why: You want Facebook to protect your privacy against the Cambridge Analyticas and other ne’er-do-wells in the world. On the other hand, Facebook is this massive internet platform that in many parts of the world is basically the internet. In sub-Saharan Africa and parts of South Asia, when people think about the internet, they think about Facebook. So there’s a countervailing tension here, which is that you also want to be able to give legitimate app developers access to some of this data to do useful things. So Facebook has a really tough balancing act here.

GAZETTE: Facebook executives say they banned the app used to harvest the data and ordered all copies of the data to be destroyed back in 2015 and have since instituted tighter data safeguards and policies. Only recently, the company claims, did it become aware that Cambridge Analytica had not in fact destroyed the data files. That timeline doesn’t seem to square with the fact that Facebook staffers were embedded in the Trump campaign’s digital operation, which Cambridge Analytica’s Nix claims to have secretly designed and run, helping the campaign use the data to target voters with political ads and other content. What kind of liability or exposure could Facebook have, and does it make a difference if the company knew about this years ago and didn’t do anything?

Krishnamurthy: I think their liability is going to depend on what the terms of service and privacy policy said at the time that this happened, what kind of guarantees they offered their users, and whether or not they violated any of those guarantees. It’s not entirely well understood by the public that there’s a big difference between a company’s terms of service and its privacy policy. The terms of service are a contract. They’re binding between you and Facebook, so there’s a possibility of recourse to the courts if either party breaches. The privacy policy, by contrast, is usually offered gratuitously. It’s not part of the contract and is therefore not enforceable like a contract.

GAZETTE: In a 2011 settlement with the FTC, Facebook agreed to disclose to users how their information would be shared with third parties, and said it would share that information only with users’ affirmative consent. That order is valid until 2031. How might this data sharing affect that order?

Krishnamurthy: It depends on what they did. It is entirely possible that Facebook did not violate the terms of the FTC consent decree because everything that it did here was within the four corners of the consent decree. This is the argument by Facebook’s deputy general counsel in a blog post, which is, “Look, everyone here consented.” Assuming that they had a good compliance program, and one would hope that they did after being rapped on the knuckles several times in the earlier part of this decade, [then they’ll argue] that “Everything here happened by the book. Everything was done correctly.” Yet the thing still blew up because the systems and policies and procedures weren’t designed to deal with those issues, so that’s Facebook’s potential liability.

GAZETTE: What are the key differences between privacy laws in the U.S. and in Europe, and how might that affect Facebook going forward?

Krishnamurthy: We have very little affirmative law in the United States that governs privacy. We have HIPAA for health care; we have a statute called the GLBA [Gramm-Leach-Bliley Act] for finance; we have the Video Privacy Protection Act, which was passed in 1988. And then, the provision at the back of it all is “Did you do anything unfair or deceptive,” which is the FTC Act. There’s a federal privacy act in the U.S. that governs the government, but there’s no overarching privacy legislation that covers every aspect of the economy. In Europe there is, and there has been since 1995 (in the European Data Protection Directive).

The U.K. is implementing the European Data Protection Directive and, under that directive, you have a right to go to a company that has your data and say, “Show me the data.” You have a right to demand corrections of data that’s inaccurate. There are some pretty heavy-duty provisions around consent to data collection or to data sharing. If you ever go to the BBC website, for example, there is this little notice that says, “We use cookies. Click here to consent yes or no.” That’s a direct manifestation of the European regulatory regime. To place that cookie on your machine, they need to ask for your consent. That never happens in the United States.

GAZETTE: It’s conceivable that Cambridge Analytica may be shut down, but what do you expect will happen to Facebook? The stock is dropping, and public sentiment has turned sharply against the company for this and other reasons. Is this perhaps an inflection point, a wake-up call of sorts to other platforms and to the tech industry as a whole?

Krishnamurthy: I hope that it is, and I think that it will be, because this is such a big story. First and foremost, Facebook has not done a good job in regard to public relations and messaging. Regardless of what the legal liabilities might be, there needs to be some accountability by Facebook regarding what happened, with clarity in terms of explaining what happened, what they’re going to do about it, etc.

I think this is a moment of fundamental reckoning for social media platforms especially, but for big tech generally. It’s been unrelenting. There’s been story after story after story around things that have gone wrong with regard to the 2016 election and social media. There are a lot of stories that happened around data breaches and data privacy more generally. There’s certainly this theme, and it’s reflected in the media, it’s reflected in scholarly conversations as well, that for the last 20 years, tech was seen as the great hope, as a force for good, a transformative force. And technology companies have had the benefit of the doubt from consumers and regulators, and they kind of got a free ride for the last 20 years. Many would argue that these companies have received preferential regulatory treatment here in the United States and elsewhere for a long time now.

To me, it feels like that honeymoon period is clearly over when it comes to the general public, when it comes to government, when it comes to investors. The fact that people are thinking about the impact of these companies and paying a lot more attention means that the companies are going to have to be a lot more thoughtful and careful.

And this is going to cost them a lot more money. So this easy ride, I think, is ending. To the extent that they continue to survive, and I think they will, they’ll look much more like conventional companies in terms of having a lot more legal and regulatory and public policy capacity. That’s an important part moving forward for them.

This interview has been edited for clarity and length.